Amazon Data Firehose

Amazon Data Firehose is a fully managed service for delivering streaming data to destinations in near real time.

Available sources:

- Read:
  - Amazon Kinesis Data Streams
  - Amazon Managed Streaming for Apache Kafka (MSK)
- Direct PUT:
  - AWS SDK
  - AWS Lambda
  - Amazon CloudWatch Logs
  - Amazon CloudWatch Events
  - Amazon EventBridge
  - Amazon CloudWatch Metric Streams
  - AWS IoT
  - Amazon Simple Email Service
  - Amazon SNS
  - AWS WAF web ACL logs
  - Amazon API Gateway access logs
  - Amazon Pinpoint
  - Amazon MSK broker logs
  - Amazon Route 53 Resolver query logs
  - AWS Network Firewall alert logs
  - AWS Network Firewall flow logs
  - Amazon ElastiCache for Redis SLOWLOG
  - Kinesis Agent (Linux)
  - Kinesis Tap (Windows)
  - Fluent Bit
  - Fluentd
  - Apache NiFi
  - Snowflake

Available destinations:

- Amazon S3
- Amazon Redshift (COPY through S3)
- Amazon OpenSearch Service
- Amazon OpenSearch Serverless
- Splunk
- Custom HTTP endpoint or HTTP endpoints owned by supported third-party service providers:
  - Datadog
  - Dynatrace
  - LogicMonitor
  - MongoDB
  - New Relic
  - Coralogix
  - Elastic

A Firehose stream is at the centre of this service: producers send data to it (up to 1 MB per record). Data is buffered and delivered once either the buffer size or the buffer interval is reached, whichever comes first.

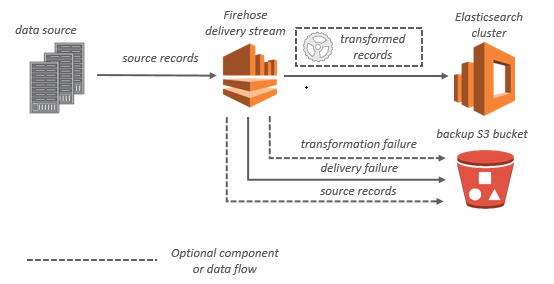
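As a rough producer-side sketch of the per-record limit, the helper below serializes events, rejects oversized records, and groups the rest into batches. The per-call limits (500 records, 4 MiB) are assumptions based on the `PutRecordBatch` quotas, and the function names `to_record` and `batch_records` are hypothetical.

```python
import json

MAX_RECORD_BYTES = 1_000 * 1024      # per-record limit (roughly the 1 MB noted above)
MAX_BATCH_RECORDS = 500              # assumption: PutRecordBatch per-call record limit
MAX_BATCH_BYTES = 4 * 1024 * 1024    # assumption: PutRecordBatch per-call size limit


def to_record(event: dict) -> dict:
    """Serialize one event as newline-delimited JSON in a {"Data": bytes} record."""
    data = (json.dumps(event) + "\n").encode("utf-8")
    if len(data) > MAX_RECORD_BYTES:
        raise ValueError(f"record is {len(data)} bytes, over the per-record limit")
    return {"Data": data}


def batch_records(records):
    """Group records into batches that respect the count and size limits."""
    batch, batch_bytes = [], 0
    for record in records:
        size = len(record["Data"])
        # Close the current batch before it would exceed either limit
        if batch and (len(batch) >= MAX_BATCH_RECORDS or batch_bytes + size > MAX_BATCH_BYTES):
            yield batch
            batch, batch_bytes = [], 0
        batch.append(record)
        batch_bytes += size
    if batch:
        yield batch
```

With boto3, each batch could then be passed as the `Records` argument of `put_record_batch`, together with the name of your own stream in `DeliveryStreamName`.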

You can also configure Amazon Data Firehose to transform your data before delivering it, and to keep the original data in a separate bucket along with failures. When a Lambda function performs the transformation, the buffer size is 1 MB by default. It can be adjusted, but remember that the maximum Lambda payload size for synchronous invocations is 6 MB.
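A minimal sketch of a transformation Lambda follows, assuming the incoming records are JSON objects. Firehose passes base64-encoded records under `records` and expects each `recordId` back with a `result` of `Ok`, `Dropped`, or `ProcessingFailed`. The `processed` field added here is a hypothetical enrichment, for illustration only.

```python
import base64
import json


def lambda_handler(event, context):
    """Transform each Firehose record and report a per-record result."""
    output = []
    for record in event["records"]:
        try:
            payload = json.loads(base64.b64decode(record["data"]))
            payload["processed"] = True  # hypothetical enrichment, for illustration only
            encoded = base64.b64encode((json.dumps(payload) + "\n").encode("utf-8"))
            output.append({
                "recordId": record["recordId"],
                "result": "Ok",
                "data": encoded.decode("utf-8"),
            })
        except (ValueError, TypeError):
            # Invalid base64, invalid JSON, or a non-object payload:
            # return the original data unchanged and mark the record as failed
            output.append({
                "recordId": record["recordId"],
                "result": "ProcessingFailed",
                "data": record["data"],
            })
    return {"records": output}
```

Keeping the batch small matters here because the whole batch, plus the transformed response, has to fit within the synchronous Lambda payload limit mentioned above.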

Buffering

Amazon Data Firehose buffers incoming streaming data in memory up to a certain size (buffering size) or for a certain period of time (buffering interval), whichever is reached first, before delivering it to the specified destinations. You can configure the buffering size and the buffering interval when creating a new delivery stream, or update them on an existing one. The allowed values for buffering size and buffering interval depend on the destination.

Buffer Max Size: 128 MB

Buffer Max Interval: 900s

For most destinations the defaults are 5 MB and 300s.
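The size-or-interval rule can be illustrated with a toy model. This is only a sketch of the flush condition, not how the service is implemented: the class name `BufferSketch` is hypothetical, time is passed in explicitly to keep it deterministic, and the check runs only when a record arrives, whereas the real service also flushes on a timer.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class BufferSketch:
    """Toy model: flush when either the size or the interval threshold is hit."""
    size_limit_bytes: int = 5 * 1024 * 1024   # default buffer size noted above
    interval_seconds: float = 300.0           # default buffer interval noted above
    _records: list = field(default_factory=list)
    _bytes: int = 0
    _opened_at: Optional[float] = None

    def add(self, data: bytes, now: float):
        """Add a record at time `now`; return the flushed batch, or None."""
        if self._opened_at is None:
            self._opened_at = now  # the interval starts with the first buffered record
        self._records.append(data)
        self._bytes += len(data)
        size_hit = self._bytes >= self.size_limit_bytes
        time_hit = now - self._opened_at >= self.interval_seconds
        if size_hit or time_hit:
            return self._flush()
        return None

    def _flush(self):
        """Hand back the buffered records and reset the buffer."""
        batch = self._records
        self._records, self._bytes, self._opened_at = [], 0, None
        return batch
```

A low-volume producer will mostly flush on the interval, while a high-volume one will mostly flush on size, which is why both values are worth tuning per destination.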