ElastiCache

ElastiCache’s use case is to:

-

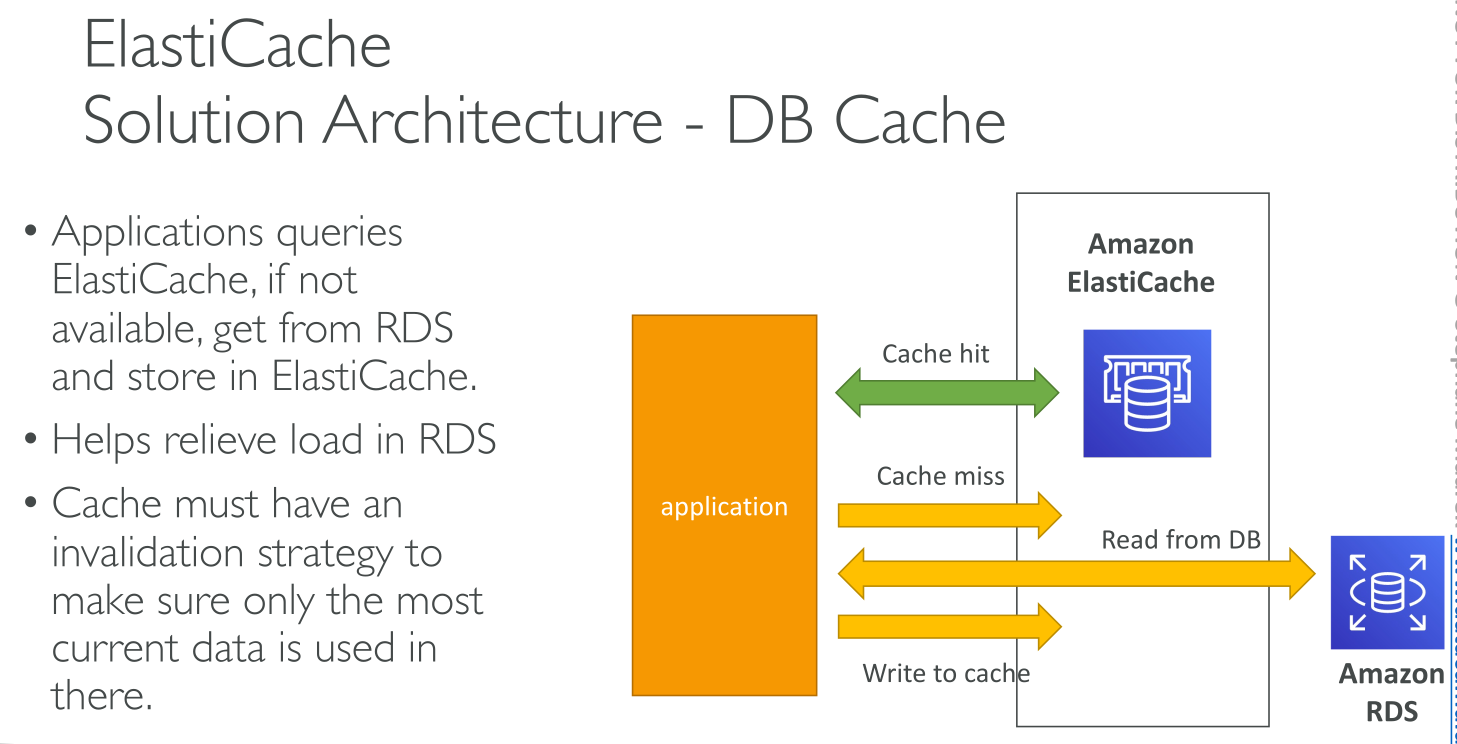

cache data for read-heavy workloads that require low latency because you reduce the load on databases, reducing costs.

-

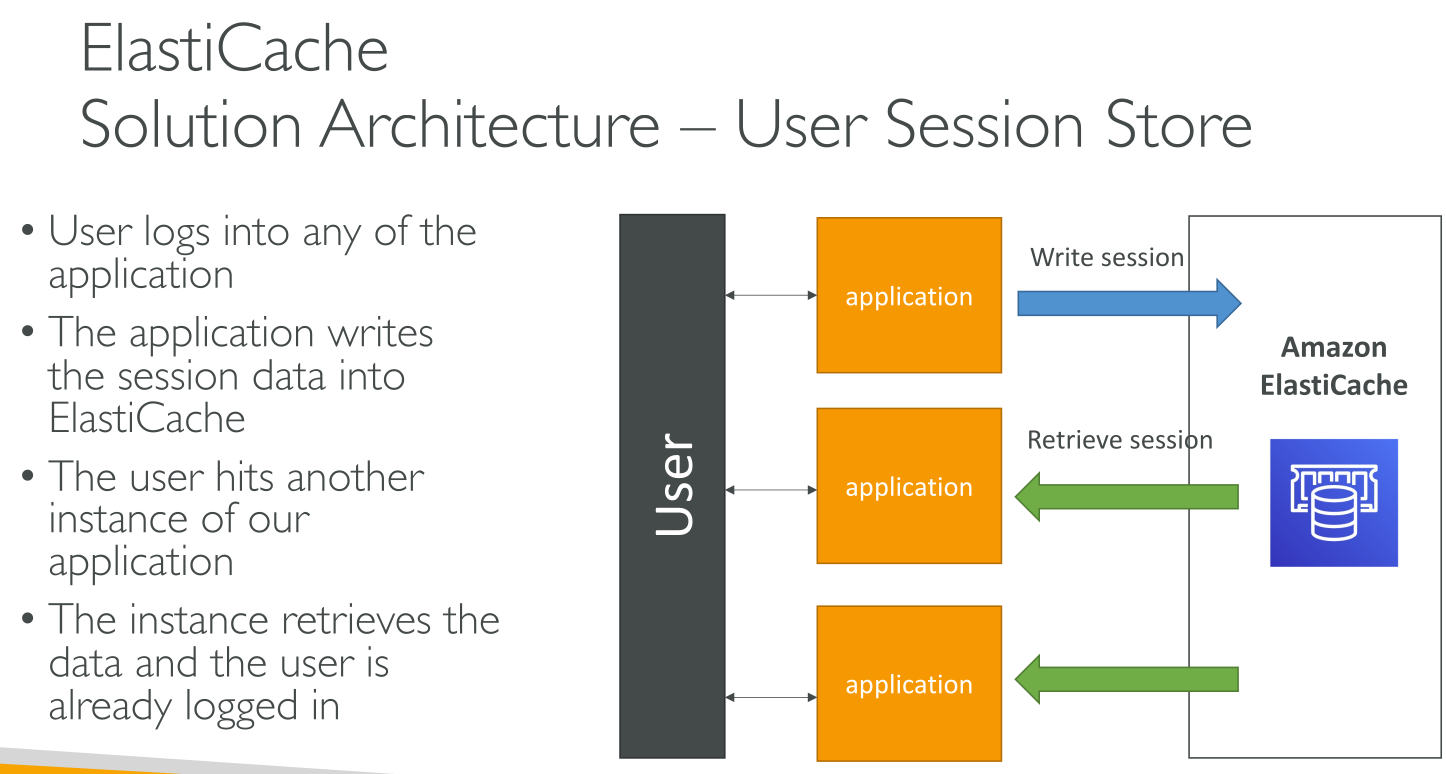

Help making servers stateless storing session data elsewhere.

In general, using a cache requires changes to the application code.
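As a rough illustration of the session use case, the sketch below keeps session data in a shared store so that any server can handle any request. The dict `session_store` and the functions `login` and `handle_request` are hypothetical names invented for this example; a real deployment would replace the dict with a network client for the cache.

```python
import uuid
from typing import Dict

# Assumption: a local dict stands in for the shared cache (for example ElastiCache).
session_store: Dict[str, Dict[str, str]] = {}


def login(username: str) -> str:
    """Create a session in the shared store and return its ID."""
    session_id = uuid.uuid4().hex
    session_store[session_id] = {"username": username}
    return session_id


def handle_request(server_name: str, session_id: str) -> str:
    """Any server can answer, because no session state lives on the server itself."""
    session = session_store.get(session_id)
    if session is None:
        return f"{server_name}: please log in"
    return f"{server_name}: hello {session['username']}"


sid = login("alice")
print(handle_request("server-a", sid))  # server-a: hello alice
print(handle_request("server-b", sid))  # server-b: hello alice
```

The application change here is that every session lookup goes through the shared store instead of server-local memory.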

Redis Vs. Memcached

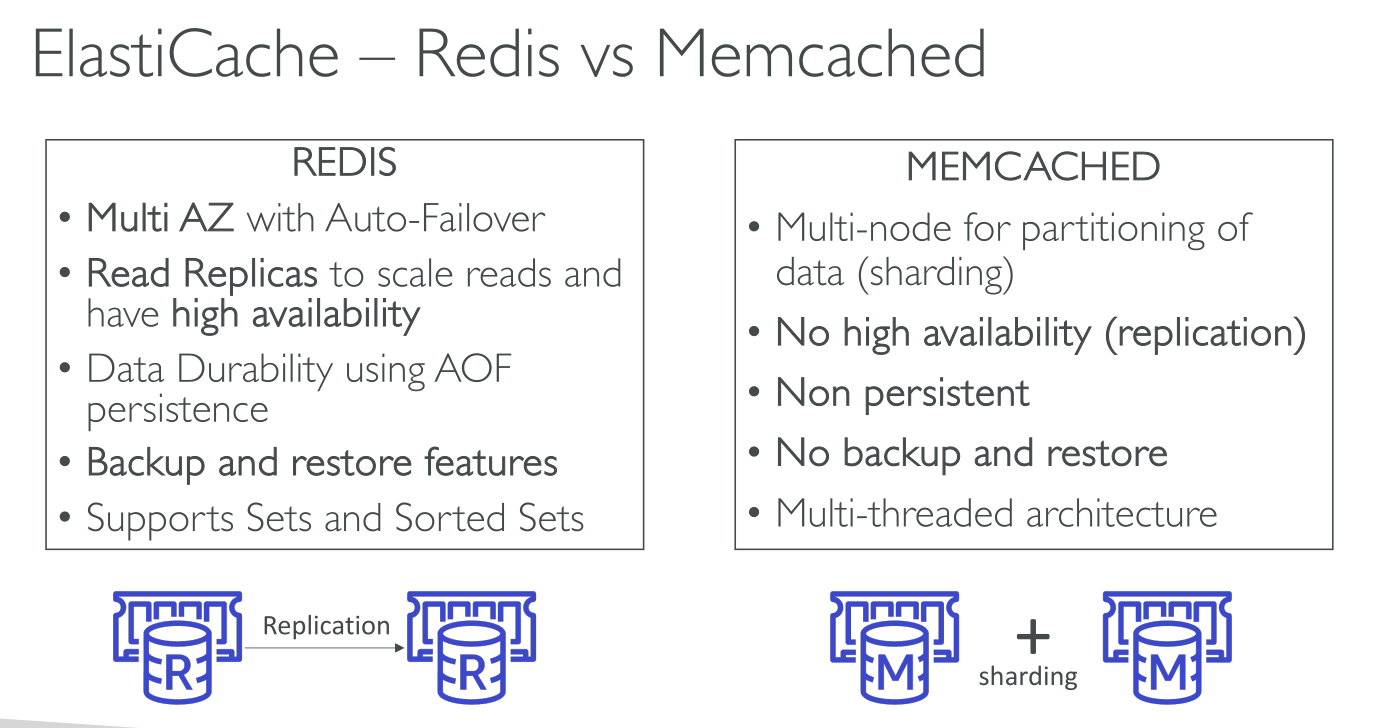

Memcached provides:

- Simple data structures
- Multi-threaded execution
- Sharding only, no replication

Redis provides:

- Advanced structures
- Multi-AZ
- Replication ⇒ Read scaling
- Backup and restore ⇒ Disaster Recovery
- Transactions

Caching Strategies

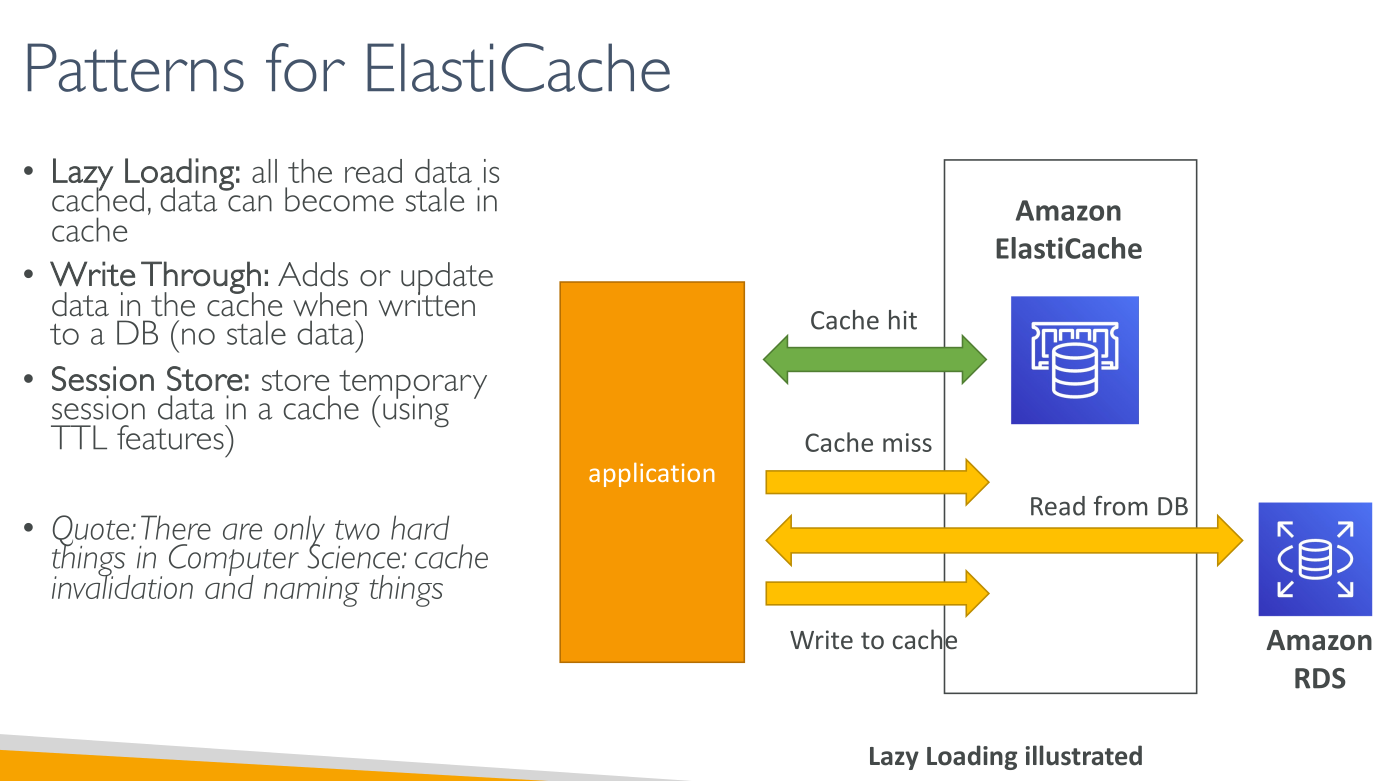

Lazy loading

Whenever your application requests data, it first makes the request to the ElastiCache cache. If the data exists in the cache and is current, ElastiCache returns the data to your application. If the data doesn’t exist in the cache or has expired, your application requests the data from your data store. Your data store then returns the data to your application. Your application next writes the data received from the store to the cache.
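The flow above can be sketched as follows. This is a minimal illustration, not an ElastiCache API: the `cache` dict, the `Database` class, and `get_lazy` are hypothetical stand-ins, and expiry of cached entries is left out to keep the read path visible.

```python
from typing import Dict, Optional


class Database:
    """Hypothetical stand-in for the data store; counts how often it is queried."""

    def __init__(self, rows: Dict[str, str]) -> None:
        self.rows = rows
        self.reads = 0

    def query(self, key: str) -> Optional[str]:
        self.reads += 1
        return self.rows.get(key)


cache: Dict[str, str] = {}  # assumption: a dict stands in for the cache
db = Database({"user:1": "Alice"})


def get_lazy(key: str) -> Optional[str]:
    """Lazy loading: ask the cache first and fall back to the database on a miss."""
    value = cache.get(key)      # request to the cache
    if value is not None:
        return value            # cache hit
    value = db.query(key)       # cache miss: query the data store
    if value is not None:
        cache[key] = value      # write the result to the cache for next time
    return value


print(get_lazy("user:1"))  # Alice (miss, loaded from the database)
print(get_lazy("user:1"))  # Alice (hit, served from the cache)
print(db.reads)            # 1
```

Only the first call reaches the database; the second is answered by the cache.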

Advantages

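- Only requested data is cached. Because most data is never requested, lazy loading avoids filling up the cache with data that isn’t requested.
- Node failures aren’t fatal for your application. A replacement node starts empty, and the application keeps working with higher latency while cache misses repopulate it.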

Disadvantages

- Cache miss penalty. Each cache miss results in three trips:
  - Initial request for data from the cache
  - Query of the database for the data
  - Writing the data to the cache
- Stale data. If data is written to the cache only when there is a cache miss, data in the cache can become stale. This happens because the cache is not updated when data changes in the database.

Write-through

The write-through strategy adds data or updates data in the cache whenever data is written to the database.
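A minimal sketch of the write path, assuming two dicts as stand-ins for the cache and the database; `write_through` and `read` are hypothetical names. The sketch writes to the database first and the cache second, which is one possible ordering and not something the strategy itself prescribes.

```python
from typing import Dict, Optional

cache: Dict[str, str] = {}     # assumption: stand-in for the cache
database: Dict[str, str] = {}  # assumption: stand-in for the data store


def write_through(key: str, value: str) -> None:
    """Write-through: every database write also updates the cache."""
    database[key] = value  # write to the database
    cache[key] = value     # write the same value to the cache


def read(key: str) -> Optional[str]:
    """Reads in this sketch are served from the cache only."""
    return cache.get(key)


write_through("user:1", "Alice")
write_through("user:1", "Alicia")  # the update reaches the cache immediately
print(read("user:1"))              # Alicia
```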

Advantages

- Data in the cache is never stale. Because the data in the cache is updated every time it’s written to the database, the data in the cache is always current.
- Write penalty vs. read penalty. Every write involves two trips:
  - A write to the cache
  - A write to the database

  This adds latency to each write. That said, end users are generally more tolerant of latency when updating data than when retrieving data. There is an inherent sense that updates are more work and thus take longer.

Disadvantages

- Missing data. If you spin up a new node, whether due to a node failure or scaling out, the node starts with missing data. The data stays missing until it’s added or updated in the database. You can minimize this by combining lazy loading with write-through.
- Cache churn. Most data is never read, so writing all of it to the cache wastes resources. By adding a time to live (TTL) value, you can minimize wasted space.

Adding TTL

Lazy loading allows for stale data but doesn’t fail with empty nodes. Write-through ensures that data is always fresh, but can fail with empty nodes and can populate the cache with superfluous data. By adding a time to live (TTL) value to each write, you can have the advantages of each strategy.

Time to live (TTL) is an integer value that specifies the number of seconds until the key expires.

This approach doesn’t guarantee that a value isn’t stale. However, it keeps data from getting too stale and requires that values in the cache are occasionally refreshed from the database.
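The sketch below combines both strategies with a TTL to show this behaviour. Everything in it is an assumption made for illustration: `TTLCache` is a hypothetical in-memory stand-in for a cache with per-key expiry, `TTL_SECONDS = 300` is an arbitrary value, and a fake clock (`now`) replaces real time so the example runs without sleeping.

```python
import time
from typing import Callable, Dict, Optional, Tuple


class TTLCache:
    """Hypothetical in-memory stand-in for a cache that supports per-key TTLs."""

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self._clock = clock
        self._items: Dict[str, Tuple[str, float]] = {}

    def set(self, key: str, value: str, ttl_seconds: int) -> None:
        # Store the value together with the time at which it expires.
        self._items[key] = (value, self._clock() + ttl_seconds)

    def get(self, key: str) -> Optional[str]:
        entry = self._items.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self._clock() >= expires_at:
            del self._items[key]  # an expired key is treated as a miss
            return None
        return value


now = [0.0]  # fake clock, in seconds
cache = TTLCache(clock=lambda: now[0])
database: Dict[str, str] = {}
TTL_SECONDS = 300  # assumed value; tune per workload


def save(key: str, value: str) -> None:
    """Write-through with a TTL on every cache write."""
    database[key] = value
    cache.set(key, value, TTL_SECONDS)


def load(key: str) -> Optional[str]:
    """Lazy loading with a TTL: refill from the database on a miss or expiry."""
    value = cache.get(key)
    if value is None:
        value = database.get(key)
        if value is not None:
            cache.set(key, value, TTL_SECONDS)
    return value


save("user:1", "Alice")
database["user:1"] = "Alicia"  # change made without going through save()
print(load("user:1"))          # Alice (stale, but only until the TTL elapses)
now[0] += TTL_SECONDS          # advance the fake clock past the TTL
print(load("user:1"))          # Alicia (expired key refreshed from the database)
```

The stale value survives for at most `TTL_SECONDS`, after which the next read falls through to the database.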